How test UI elements / get ServiceLoader working?

-

In order to support pull request #8947 I want to make the casualty selection UI testable.

Calling UiContext.setDefaultMapDir from a new unit test causes a call to java.util.ServiceLoader for org.triplea.game.ApplicationContext.

This call fails.This test will be interactive and is not meant to be included in a normal background test run. Is there a better context for my UI test code?

If not: What do I need to do so java.util.ServiceLoader successfully loads org.triplea.game.ApplicationContext.

Thanks in advance

Rai -

@rainova I don't know if I'm fully clear on the goal. Is the goal to use Junit to launch a UI component? Could you do the same with a

mainmethod? -

-

It's been a while, so what I say may no longer be accurate, but I'm happy to try to help ...

Let me begin by saying, ideally, tests should never hit a

ServiceLoadercall becauseServiceLoaderis a very simplistic DI framework that isn't really test-friendly because it doesn't allow programmatic registration of services. I'm pretty sure we originally strove to make allServiceLoadercalls frommain(because that method should have never been called from a test) and then injected the dependencies downstream from there using either constructor or setter injection.With that being said, if you are hitting a

ServiceLoadercall from your test, it probably means a call toServiceLoaderwas added someplace outside ofmain(possibly for good reasons at the time). My approach would be to identify where thatServiceLoadercall is and either- pull the dependency on

ApplicationContextupstream as far as you need and inject a test-specificApplicationContextfrom there via ctor/setter injection; - if extracting the

ApplicationContextdependency is simply too complex, add a test-specific overload on the type in question, if possible, that allows your fixture to inject anApplicationContextbefore the test begins while leaving the default method in place for production code to continue to use; - if the call site can handle no

ApplicationContextbeing available (unlikely but possible), replace the call toServices#loadAnywithServices#tryLoadAnyand deal with theOptionalas needed; or - if all else fails, you could try adding some helper methods to the

Servicesfacade (which allServiceLoadercalls should be going through) that allow you to programmatically register/unregister services during fixture setup/teardown; the complexity here is thatServicesis just a collection of static methods and adding thread-safe state to it without converting it to a proper singleton is going to be less than satisfactory.

It would help to know the exact location where the problematic call to

ServiceLoaderis coming from. @RaiNova, could you identify that please? Given that your test is callingUiContext#setDefaultMapDir, I'm guessing it may beClientFileSystemHelper#getCodeSourceFolder. - pull the dependency on

-

In order to start the dialog I want to test (CasualtySelection), I need to activate functionality that is unavailable in the test environment. So I now use a regular main method (@LaFayette Thanks for the advice).

@ssoloff ,@LaFayette or whoever may want to comment on this: Please let me know, if my solution is acceptable:

Since my UI test needs pretty much the same setup like HeadedGameRunner, I start it by passing the new command line parameter-interactiveTest TestClass

to HeadedGameRunner.main

If that parameter is provided, my version of HeadedGameRunner.main does not end by calling GameRunner.start but by calling my interactive test.

In which folder shall I place the test class? Under which name? (Currently: same folder as the tested class, i.e. games/strategy/triplea/ui under the name CasualtySelectionInteractiveTest.java)

(Details can be sorted when I submit the pull request, but I would like to follow the right fundamental principles right now.)

-

@rainova, I'll let @lafayette decide if he wants to add test-specific code (the new command-line parameter and associated processing) to the production

HeadedGameRunner. If that approach is not acceptable, you can package up your test-specificmainentry point into a new project to keep the test and production code separate. That would look something like the following (in order to deal with theServiceLoaderissue):- Create a new project for your runner parallel to <repo>/game-app/game-headed named

game-headed-test. - Create a

HeadedGameTestRunnerclass to host your newmainentry point. - Create a

HeadedGameTestApplicationContextclass parallel toHeadedGameTestRunner. The implementation ofgetMainClassshould returnHeadedGameTestRunner.class. - Create src/main/resources/META-INF/services/org.triplea.game.ApplicationContext in your new project. This file should simply contain the fully-qualified name of your

HeadedGameTestApplicationContextclass (e.g.org.triplea.game.client.test.HeadedGameTestApplicationContext) - Create an empty file named .triplea-root at the root of your new project.

- Create a new IDEA run configuration for

HeadedGameTestRunner#mainin <repo>/.idea/runConfigurations/HeadedGameTestRunner.xml and configure it appropriately (see the corresponding run configuration in HeadedGameRunner.xml)

@lafayette will probably have better suggestions for naming packages and types.

The only drawback of having a separate project is you may end up duplicating a lot of code from

HeaderGameRunnerin your own runner in order to prepare the test environment. If that turns out to be the case, you could investigate having your new project depend on thegame-headedproject and expose the shared code you need. But I'd wait to see just how much code is actually duplicated between the two. I suspect your test runner can exclude a lot of stuff that is required by the production client (e.g. game notes migrator). - Create a new project for your runner parallel to <repo>/game-app/game-headed named

-

TBH, it seems we are discussing potentially a lot of test scaffolding. Decomposing into slightly smaller units might help.

Dependencies can potentially be converted to be an interface that are then injected. EG:

SomeStaticCall.run()intoRunnable r, and then inject the Runnable as a parameter.Backing up a bit though, 'view-model' might be a good pattern here. In essence make a model that represents an idealized and simplified version of the UI. A 'string' would represent for example the selection in a drop down. Then all logic can be built into this 'view-model' class and the UI is super simplified to send all events to the 'view-model' and to query the 'view-model' for all data. The 'view-model' should then generally be readily testable.

-

TBH, it seems we are discussing potentially a lot of test scaffolding. Decomposing into slightly smaller units might help.

Yes. Let's keep it simple please: My professional programming experience is 20 years old, and I am not really proficient with today's complex build configurations.Dependencies can potentially be converted to be an interface that are then injected. EG: SomeStaticCall.run() into Runnable r, and then inject the Runnable as a parameter.

Do you mean something like this:

public final class HeadedGameRunner { // ... /** Entry point for running a new headed game client. */ public static void main(final String[] args) { final List<String> argsList = Arrays.asList(args); final int iInteractiveTest = argsList.indexOf("-interactiveTest"); final String[] normalArgs = iInteractiveTest<0 ? args : withoutElementsAtAndAfter(args,iInteractiveTest); checkNotNull(normalArgs); // and the rest of the setup code, using normalArgs instead of args, but otherwise unchanged if (iInteractiveTest < 0) { log.info("Launching game, version: {} ", Injections.getInstance().getEngineVersion()); GameRunner.start(); } else { final String nameOfInteractiveTestClass = args[iInteractiveTest+1]; try { final Runnable interactiveTestClass = Class.forName(nameOfInteractiveTestClass) .asSubclass(Runnable.class) .getDeclaredConstructor() .newInstance(); interactiveTestClass.run(); } catch (Exception e) { log.error(e.toString()); } } } }That's my current code. I also tried moving the setup code into a public method and then calling it from my test class. IntelliJ wouldn't let me do that, telling me it would create a circular dependency. I'm happy to go that path, but I'd need advice.

Backing up a bit though, 'view-model' might be a good pattern here. In essence make a model that represents an idealized and simplified version of the UI. A 'string' would represent for example the selection in a drop down. Then all logic can be built into this 'view-model' class and the UI is super simplified to send all events to the 'view-model' and to query the 'view-model' for all data. The 'view-model' should then generally be readily testable

Modularisation of the UI code would certainly help. Setting up a test framework seems to me to be a best practice start for that.

-

@rainova Can we step back a bit. How do you mean by test framework? What kind of UI testing are we talking about? Would a physical UI be launched and then clicked? Would this be for just sample windows that are more for isolated development/testing?

-

@lafayette What kind of UI testing are we talking about?

Would a physical UI be launched and then clicked?A physical UI would be launched. Currently it seems to be sufficient to click it via

JButton.doClick. Reading pixels from thejava.awt.Components (e.g. by having them paint intoBufferedImages) may also suffice, so I don't see any need forjava.awt.RobotWould this be for just sample windows that are more for isolated development/testing?

My imminent need is to quickly start the

CasualtySelectiondialog so I can manually test my code in a quick and focused fashion.I'd also like to automate some UI testing, e.g.

-

(a) making sure that the

UnitChoosershows weaker units above stronger units and -

(b) making sure the non-withdrawable icon is shown in the right situations.

Other use cases may show up. Would it be advantageous to include such tests in the standard unit test run?

No test specific code in

HeadedGameRunneris necessary (I followed @ssoloff's advise with a new extension ofApplicationContextto be found by theServiceLoader. I did not set up an new project, because I want to stay consistent with the current code/design and I also want keep additional scaffolding minimal.)Apart from how to set up my test stuff the right way, one concern is bothering me:

When running a unit test, the working directory is not the source root ofgame-headed.

As a consequence, attempts to load ressource files would fail. I have solved this forResourceFileLoader.loadImageandEngineImageLoader.loadImageand my current UI tests run automativally.

However, some other methods in the overall code rely on the current working directory (e.g.ResourceFileLoader.loadImageAssetand the underlyingImageLoader.getImage).In order to stay focused on the casualty selection and for general stability reasons, I'd like to avoid changing image loading code as much as possible.

Is there a way to specity the working directory for unit tests - ideally also for the tests run by the central build system? -

-

@rainova Generally test code has had to deal with the root directory being either 'triplea' or being the subproject folder. There are differing contexts where it could be one or the other even.

UI testing is an interesting path, though there are some complications that should be thought through:

(1) The test runner for github PRs and branch builds is headless. Any rendering of UI components will result in a java.awt exception.

(2) Launching UI components during test can make the tests very slow.

(3) Testing UI components can be really brittle. Changes from a drop down to a text field, a label to to a text box, moving labels from one screen to another, can all break the tests even though the application is still working fine.

(4) UI testing can be inaccurate, "sure, this label has the right value, but it's covered by another component that makes it unreadable""Presentation Model" I think might be a good way to solve this: https://martinfowler.com/eaaDev/PresentationModel.html

The presentation model can be fully tested. Once you have that, the UI has trivial bindings between the presentation model and the UI. Those bindings can be verified by hand.

Here is an example:

interface DownloadMapsUi { void startMapDownload(String) void removeMapDownload(String) } class DownloadMapsModel{ // notice how the UI interface is injected and can be mocked. @Setter DownloadMapsUi downloadMapsUi; @Getter List<String> mapsAvailableToDownload; DownloadCoordinator downloadCoordinator; void startDownload(String map) { startDownloadEvent(map) .onComplete(map -> downloadMapsUI.removeMapDownload(map)); } } // Then the UI class class DownloadMapsSwingUi implements DownloadMapsUI { private final DownloadMapModel model; DownloadMapsSwingUi(DownloadMapModel model) { this.model = model; ComboBox<String> mapDownloads = new CombBox<>(model.getMapsAvailableToDownload()); : mapDownloads.addEventListener(onClickMap -> model.startDownload(onClickMap); } }There is an example of this with the PBEM and PBF screens. It's not the best example because the UI invokes the model to often on every key press. But otherwise fits the pattern and the model classes are fully tested (which found a number of interesting logic bugs in the process).

- https://github.com/triplea-game/triplea/blob/master/game-app/game-core/src/main/java/games/strategy/engine/framework/startup/ui/posted/game/pbf/ForumPosterEditorViewModel.java

- https://github.com/triplea-game/triplea/blob/master/game-app/game-core/src/main/java/games/strategy/engine/framework/startup/ui/posted/game/pbem/EmailSenderEditorViewModel.java

- https://github.com/triplea-game/triplea/blob/master/game-app/game-core/src/test/java/games/strategy/engine/framework/startup/ui/posted/game/pbf/ForumPosterEditorViewModelTest.java

- https://github.com/triplea-game/triplea/blob/master/game-app/game-core/src/test/java/games/strategy/engine/framework/startup/ui/posted/game/pbem/EmailSenderEditorViewModelTest.java

It's a very major effort to refactor the UI classes to have a clean split on their model and UI rendering, though the before and after speaks to the split having a positive effect. The UI code gets a lot simpler and the logic completely removed, the UI code becomes nearly perfectly linear and the logic is isolated and fully testable.

IMO we probably should do this kind of refactoring for just about all UI windows.

Another point to consider, a number of UIs are ripe to be changed. If we invest a lot in UI testing to only change the UI, we may find ourselves overly coupled to UI tests rather than testing workflows and functionality. For example, when testing exception throwing code, it's often better to check that an exception was thrown and contains a key word, rather than an exception was thrown with specific text (and as soon as that text changes, perhaps to give more detail, suddenly the test is now invalid an is throwing a failure even though the application code is working fine).

WDYT?

-

@lafayette WDYT?

PresenterModel looks like an interesting design principle. If it would be a general design principle in triplea

and if I would write a new dialog, then I would probably implement a PresenterModel.What I am trying to do for triplea is much more limited:

- correcting the assignment of damage to air units with different movement points

- differentiating between units than can vs. can't withdraw in the casualty selecting dialog

- annotating the respective units in the dialog with a non-withdrawable icon

- making sure the casualty selection dialog shows therm in the right order

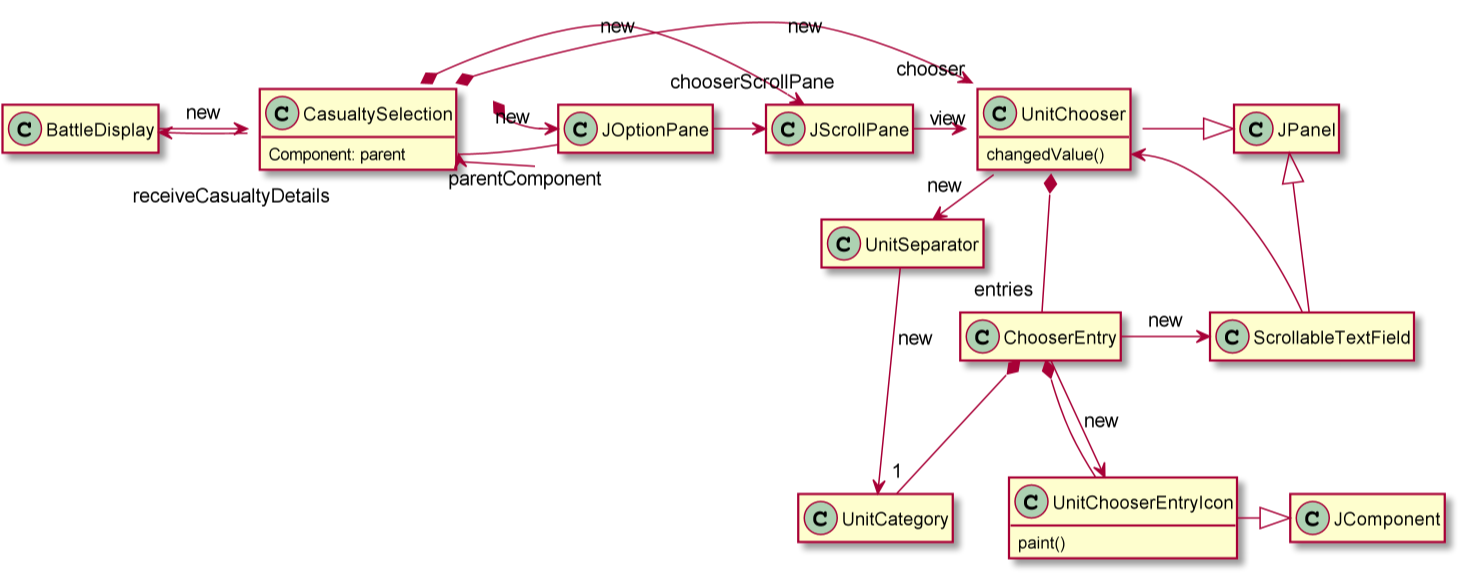

The diagram shows the most important classes in this context.

Redesigning CasualtySelection/UnitChooser would be a greater undertaking, which I try to avoid.

I‘m still writing automated test which include some aspects that - given the current design - can only be verified including the ui.I’ve been an IT project manager for many years, switched to the business side a few years ago and set up a test team (since I thought it was a necessity nobody else cared about). The reluctance to automated ui testing always intrigued me - so I‘m having a go at it myself now. And I am having a lot of fun with it

I agree with the complications of automated ui testing you mentioned, taking the brittleness as the most serious one.

My developer colleagues argue that the brittleness leads to expensive adaptation of the test code.In triplea, the greatest issue with brittleness is probably not efficiency but process: If another contributor changes

an implementation detail, I don’t want to cause her grief by a test that fails although functionality and ui are still correct.

This could be very confusing for her.How about this: I take the scenario "my ui tests fail, because another developer has changed an implementation detail" into

account by- writing tests that verify that my assumptions hold, so if they fail, the other developer will understand what happend

- try to address the right level of abstraction in my essential ui tests

- show you what I came up with, and we go on from there?

Cheers

RaiPS: Don't worry: If you end up rejecting to include my ui tests, I had a great time writing them, anyway

-

@rainova The diagram you posted is interesting and thank you for creating that. I think it shows pretty well how UI and logic concerns are hopelessly intermingled and that has gone on to cause a plethora of other problems.

I don't think it's possible to solve that tests can't be headed when run in CI. That results in tests that need to be manually run, in which case developers may never know that they are broken without adding additional steps to verifying changes.

Generally UI tests are expensive compared to their benefit. They are often very slow and gets a team into a situation where test execution goes from seconds or minutes to many dozen minutes (and your computer is flashing UIs while this is happening, so you can't even do any other work while you are waiting for tests to run).

Yet another problem is that UI tests are very much end-to-end and that can lead to the integration testing trap. How do you account for all the various scenarios that you can come across, how do you test for error scenarios?

I think the place for UI tests would be for "given a UI model -> does the UI render correctly?" That would then be totally divorced from all logic testing. If we do test the logic of the UI as part of UI tests, then changes to the logic would then break the UI tests. That is not a good level of isolation. Further though, if the logic is already tested by a UI tests, then we have disincentive to test the logic on its own. This means more and more logic becomes tested through UI tests (and UI tests being end-to-end tests are impossible to write enough of them to sufficiently cover the majority of all logic branches)

We had an initiative in TripleA where we wanted to rewrite the UI to use JavaFX. That sputtered out largely because the UI is just too coupled with too much logic and doing that UI update would involved a rewrite of most everything. So we really would prefer to have a presentation model going forward, and it is a lot of work to refactor our UIs to have that.

-

FWIW, you may want to check out Fest @RaiNova: https://tuhrig.de/automated-ui-testing-with-swing-fest/

That does not solve the problem that you still needed a headed system to display the UI components, but fest is a pretty decent testing framework for UI components, it's quite functional.

-

@lafayette JBrains says isolated tests are hard to write on badly designed code. Forbidding automated integration tests encourages good design. We could start a long discussion about that, and it would lead us far away from the question, whether the automated ui tests I write to verify my code does what it should do should be integrated in the junit test run.

Nearer to that question are:

a) should I improve the design while applying my changes?

b) should someone else improve the design, before I apply my changes?

c) how great is the need to improve the design in triplea?

d) how can we get an overview of the most important design issues?

e) how could the respective issue be fixed?

f) what can / should we do to prevent the issue from manifesting again in an open source project?

g) how much effort is in e) and f)

h) what should we do next regarding triplea design?a) I see more fun things I can do with triplea. If I would go into improving triplea design, I would not start middle out (introducing a particular design pattern in the specific piece of code I happened to work on for other reasons) but bottom up (fixing one particular issue I feel,like, e.g. replace the callback from the casualty selection code to disable the button that started it by making the casualty selection dialog behave like a normal modal dialog, or consolidating file access or one of the other potentials I happened to come across) or top down (first answering the questions d-h in order).

b) if someone volunteers, please let me know so I wait until she's done and do my code changes on top

c) I‘m cool with the current quality. I doesn't slow me down too much. But if someone sees the need to improve the quality, I might happen to volunteer

d) how about starting a thread in the developer forum?

e-h) when we have an overview, I am happy to work on these questions

Thanks for the link to the test framework.I'll have a look

Cheers

Rai -

@rainova I had a different take-away from the JBrains talk. Notably that if you test end-to-end you cannot get complete coverage of all logic paths because there the logic paths combine in a combinatoral way. If you test each module individually, the number of logic path permutations becomes additive rather than combinatoral. He suggest though that end-to-end tests are still necessary, but are necessary to verify integration, not logic.

(A) I agree there are more fun things to do. On the other hand we have a track record of brittleness and needing to do a lot of manual testing.

(B) There is nobody else.

(C) In some places, pretty great. For example, you cannot change any of the the class variable name in any class that extendsGameDataComponent. Another example, the battle calculator copies the entire game data on load and this copy represents the significant majority of time that the AI uses to do move computations.

(D) Good question

(E) Good question

(F) Another good question, we have more static analysis checks in place now that help, and we do code reviews.(H) Have you read the TripleA 3.0 thread? https://forums.triplea-game.org/topic/2794/triplea-3-0-design-proposal-discussion/23

-

@LaFayette … the problem that you still needed a headed system to display the UI components

Does a linux server run the backend test? Can you equip it with a virtual screen as described in https://eclipsesource.com/blogs/2013/12/06/run-ui-tests-in-the-background-on-linux/ ?

-

@rainova Github actions (previously we used Travis) both run a within a container. The github actions are defined by each of the YML files in: https://github.com/triplea-game/triplea/tree/master/.github/workflows

I'm pretty sure, but not certain, that one can not connect a virtual screen.

-

@lafayette

Having no experience with build environments, I have to ask: Does this look promising

https://sick.codes/xfce-inside-docker-virtual-display-screen-inside-your-headless-container/

? -

@rainova Maybe, that link is a bit skimpy on detail. FWIW you could verify some of that yourself by running that docker container. You would need to do more to have tests run from within the container or somehow to forward to the virtual env.

Though, if you did manage to get a headless container running, there are some drawbacks:

- setting up yet another docker and one more built step is just one more thing

- what happens if a test fails? If the UI is headless, how to tell what was incorrect? What if the results from the virtual desktop do not agree with running without docker?